Hello readers, we're going to go over the following sections:

- Create Lambda Function Using Terraform

- Create API Gateway Using Terraform

- Create Lambda Function with Dependencies & Access to S3 Bucket

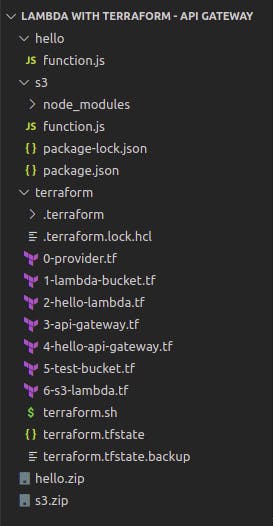

Before we get started this is how are final directory will look like

now, let get started

Create Lambda Function Using Terraform

First of all, let's create an AWS Lambda function. I will use the Node.js runtime for the first example, but the steps are almost identical for all languages.

hello/function.js

exports.handler = async (event) => {

console.log('Event: ', event);

let responseMessage = 'Hello, World!';

if (event.queryStringParameters && event.queryStringParameters['Name']) {

responseMessage = 'Hello, ' + event.queryStringParameters['Name'] + '!';

}

if (event.httpMethod === 'POST') {

const body = JSON.parse(event.body);

responseMessage = 'Hello, ' + body.name + '!';

}

const response = {

statusCode: 200,

headers: {

'Content-Type': 'application/json',

},

body: JSON.stringify({

message: responseMessage

}),

};

return response;

};
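The handler above assumes that a POST request always carries a valid JSON body with a name field. As a rough sketch only, a more defensive variant could look like the following. It assumes the event uses the same fields the original handler reads (httpMethod, queryStringParameters, body); the jsonResponse and buildResponse helpers are hypothetical names introduced for illustration and are not part of the original function.

```javascript
// Sketch of a more defensive variant of hello/function.js.
// Assumption: the event has the same shape the original handler reads
// (httpMethod, queryStringParameters, body).

// Hypothetical helper: builds a proxy-style response with a JSON body.
const jsonResponse = (statusCode, payload) => ({
  statusCode,
  headers: { 'Content-Type': 'application/json' },
  body: JSON.stringify(payload),
});

// Hypothetical helper: derives the response from the event without throwing.
const buildResponse = (event = {}) => {
  const query = event.queryStringParameters || {};
  let name = query.Name || 'World';

  if (event.httpMethod === 'POST') {
    let body;
    try {
      body = JSON.parse(event.body);
    } catch (err) {
      // A missing or malformed body ends up here.
      return jsonResponse(400, { message: 'Request body must be valid JSON.' });
    }
    if (!body || typeof body.name !== 'string') {
      return jsonResponse(400, { message: 'Field "name" is required.' });
    }
    name = body.name;
  }

  return jsonResponse(200, { message: `Hello, ${name}!` });
};

exports.handler = async (event) => buildResponse(event);
```

Keeping the logic in a synchronous helper makes it easy to try locally with plain objects before deploying anything.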

Next is the Terraform code. First, we declare version constraints for the providers and for the Terraform CLI itself. We also declare the aws provider and specify the AWS region where the S3 buckets and Lambda functions will be created.

terraform/0-provider.tf

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 4.21.0"

}

random = {

source = "hashicorp/random"

version = "~> 3.3.0"

}

archive = {

source = "hashicorp/archive"

version = "~> 2.2.0"

}

}

required_version = "~> 1.0"

}

provider "aws" {

region = "us-east-1"

}

Since S3 bucket names must be globally unique, we can use a random pet generator to add random words to the bucket name after the lambda prefix. Then let's create the S3 bucket itself with the generated name. You can also block all public access to this bucket, which is my default setting.

terraform/1-lambda-bucket.tf

resource "random_pet" "lambda_bucket_name" {

prefix = "lambda"

length = 2

}

resource "aws_s3_bucket" "lambda_bucket" {

bucket = random_pet.lambda_bucket_name.id

force_destroy = true

}

resource "aws_s3_bucket_public_access_block" "lambda_bucket" {

bucket = aws_s3_bucket.lambda_bucket.id

block_public_acls = true

block_public_policy = true

ignore_public_acls = true

restrict_public_buckets = true

}

The following file contains the Terraform code for the Lambda function. Like most AWS managed services, Lambda needs access to other AWS services, such as CloudWatch to write logs. In a later example, we will also grant a function access to an S3 bucket to read a file.

terraform/2-hello-lambda.tf

resource "aws_iam_role" "hello_lambda_exec" {

name = "hello-lambda"

assume_role_policy = <<POLICY

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": "lambda.amazonaws.com"

},

"Action": "sts:AssumeRole"

}

]

}

POLICY

}

resource "aws_iam_role_policy_attachment" "hello_lambda_policy" {

role = aws_iam_role.hello_lambda_exec.name

policy_arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"

}

resource "aws_lambda_function" "hello" {

function_name = "hello"

s3_bucket = aws_s3_bucket.lambda_bucket.id

s3_key = aws_s3_object.lambda_hello.key

runtime = "nodejs16.x"

handler = "function.handler"

source_code_hash = data.archive_file.lambda_hello.output_base64sha256

role = aws_iam_role.hello_lambda_exec.arn

}

resource "aws_cloudwatch_log_group" "hello" {

name = "/aws/lambda/${aws_lambda_function.hello.function_name}"

retention_in_days = 14

}

data "archive_file" "lambda_hello" {

type = "zip"

source_dir = "../${path.module}/hello"

output_path = "../${path.module}/hello.zip"

}

resource "aws_s3_object" "lambda_hello" {

bucket = aws_s3_bucket.lambda_bucket.id

key = "hello.zip"

source = data.archive_file.lambda_hello.output_path

etag = filemd5(data.archive_file.lambda_hello.output_path)

}
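The configuration above passes two different hashes of the zip archive: source_code_hash takes a base64-encoded SHA-256 digest, while etag takes a hex-encoded MD5 digest from filemd5. The sketch below shows roughly how those two values are derived. The base64Sha256 and hexMd5 helper names are made up for illustration, and the byte content is a stand-in for the real archive.

```javascript
const crypto = require('crypto');

// Base64-encoded SHA-256 digest, the encoding used for source_code_hash.
const base64Sha256 = (buffer) =>
  crypto.createHash('sha256').update(buffer).digest('base64');

// Hex-encoded MD5 digest, the encoding produced by Terraform's filemd5.
const hexMd5 = (buffer) =>
  crypto.createHash('md5').update(buffer).digest('hex');

// Stand-in bytes (assumption); in practice you would read the archive,
// for example with fs.readFileSync('../hello.zip').
const archive = Buffer.from('hello');

console.log('sha256 (base64):', base64Sha256(archive));
console.log('md5 (hex):', hexMd5(archive));
```

Whenever the function code changes, both digests change, which is what lets Terraform notice that the object and the function need to be updated.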

We're ready to initialize terraform and apply it.

terraform init

terraform apply

Since we don't have an API Gateway just yet, let's invoke this function with the aws lambda invoke command.

aws lambda invoke --region=us-east-1 --function-name=hello response.json

If you print the response, you should see the message Hello, World! inside the body field.

cat response.json
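Note that the handler returns the body as a JSON string nested inside the response object, so reading the message takes two parsing steps. Here is a small sketch of that, where the raw value is an assumed example of what response.json would contain for an invocation without a payload.

```javascript
// Assumed example content of response.json for an invocation without payload.
const raw = JSON.stringify({
  statusCode: 200,
  headers: { 'Content-Type': 'application/json' },
  body: JSON.stringify({ message: 'Hello, World!' }),
});

// First parse: the response object returned by the handler.
const response = JSON.parse(raw);

// Second parse: the body, which the handler serialized with JSON.stringify.
const payload = JSON.parse(response.body);

console.log(response.statusCode, payload.message);
```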

Create API Gateway Using Terraform

The next step is to create API Gateway using terraform and integrate it with our Lambda.

terraform/3-api-gateway.tf

resource "aws_apigatewayv2_api" "main" {

name = "main"

protocol_type = "HTTP"

}

resource "aws_apigatewayv2_stage" "dev" {

api_id = aws_apigatewayv2_api.main.id

name = "dev"

auto_deploy = true

access_log_settings {

destination_arn = aws_cloudwatch_log_group.main_api_gw.arn

format = jsonencode({

requestId = "$context.requestId"

sourceIp = "$context.identity.sourceIp"

requestTime = "$context.requestTime"

protocol = "$context.protocol"

httpMethod = "$context.httpMethod"

resourcePath = "$context.resourcePath"

routeKey = "$context.routeKey"

status = "$context.status"

responseLength = "$context.responseLength"

integrationErrorMessage = "$context.integrationErrorMessage"

}

)

}

}

resource "aws_cloudwatch_log_group" "main_api_gw" {

name = "/aws/api-gw/${aws_apigatewayv2_api.main.name}"

retention_in_days = 14

}
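Because the access log format above is built with jsonencode, each log entry should be a single JSON object, which makes the logs easy to process. The following is only a sketch: the sample line is invented for illustration, and summarize is a hypothetical helper. Since every variable in the format is wrapped in quotes, values such as status arrive as strings.

```javascript
// Hypothetical log entry (assumption); real values come from the
// $context variables listed in the access log format.
const line = JSON.stringify({
  requestId: 'example-request-id',
  httpMethod: 'GET',
  routeKey: 'GET /hello',
  status: '200',
});

// Hypothetical helper: extracts the route and flags failed requests.
const summarize = (rawLine) => {
  const entry = JSON.parse(rawLine);
  const status = Number(entry.status); // status is logged as a string
  return { route: entry.routeKey, status, failed: status >= 400 };
};

console.log(summarize(line));
```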

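The file above also creates the API Gateway stage. One way to think about stages is as different environments, such as dev, staging, or production. Some people use them to version their APIs. Our stage is called dev and has auto-deploy enabled. Next, we integrate the API with the Lambda function, add the GET and POST routes, and allow API Gateway to invoke the function.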

terraform/4-hello-api-gateway.tf

resource "aws_apigatewayv2_integration" "lambda_hello" {

api_id = aws_apigatewayv2_api.main.id

integration_uri = aws_lambda_function.hello.invoke_arn

integration_type = "AWS_PROXY"

integration_method = "POST"

}

resource "aws_apigatewayv2_route" "get_hello" {

api_id = aws_apigatewayv2_api.main.id

route_key = "GET /hello"

target = "integrations/${aws_apigatewayv2_integration.lambda_hello.id}"

}

resource "aws_apigatewayv2_route" "post_hello" {

api_id = aws_apigatewayv2_api.main.id

route_key = "POST /hello"

target = "integrations/${aws_apigatewayv2_integration.lambda_hello.id}"

}

resource "aws_lambda_permission" "api_gw" {

statement_id = "AllowExecutionFromAPIGateway"

action = "lambda:InvokeFunction"

function_name = aws_lambda_function.hello.function_name

principal = "apigateway.amazonaws.com"

source_arn = "${aws_apigatewayv2_api.main.execution_arn}/*/*"

}

output "hello_base_url" {

value = aws_apigatewayv2_stage.dev.invoke_url

}

Go back to the terminal and apply the Terraform configuration. The hello_base_url output contains the base URL of the dev stage.

terraform apply

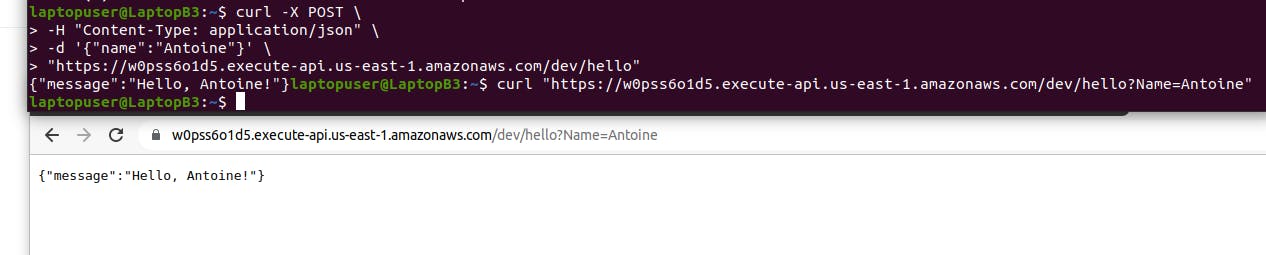

Let's test the POST method. In this case, we provide a payload as a JSON object to the hello endpoint.

curl -X POST \

-H "Content-Type: application/json" \

-d '{"name":"Antoine"}' \

"https://<id>.execute-api.us-east-1.amazonaws.com/dev/hello"

Let's also test the HTTP GET method. Append the hello path to the base URL and optionally provide the Name query parameter.

curl "https://<id>.execute-api.us-east-1.amazonaws.com/dev/hello?Name=Antoine"
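If you prefer to build these request URLs in code, the sketch below composes them from the stage URL. The baseUrl value is a made-up placeholder (use your own hello_base_url output), and helloUrl is a hypothetical helper name.

```javascript
// Placeholder base URL (assumption); use the hello_base_url output instead.
const baseUrl = 'https://abc123.execute-api.us-east-1.amazonaws.com/dev';

// Hypothetical helper: appends the /hello path and the optional Name parameter.
const helloUrl = (base, name) => {
  // Strip trailing slashes so the path is not doubled up.
  const url = new URL(`${base.replace(/\/+$/, '')}/hello`);
  if (name) {
    // searchParams takes care of URL-encoding the value.
    url.searchParams.set('Name', name);
  }
  return url.toString();
};

console.log(helloUrl(baseUrl));
console.log(helloUrl(baseUrl, 'Antoine'));
```

Note that the parameter is spelled Name with a capital N, because that is the key the handler looks up.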

Create Lambda Function with Dependencies & Access to S3 Bucket

In the final section, let's create another Lambda function with external dependencies. Also, let's grant this function access to read a file in a new S3 bucket.

terraform/5-test-bucket.tf

resource "random_pet" "test_bucket_name" {

prefix = "test"

length = 2

}

resource "aws_s3_bucket" "test" {

bucket = random_pet.test_bucket_name.id

force_destroy = true

}

resource "aws_s3_bucket_public_access_block" "test" {

bucket = aws_s3_bucket.test.id

block_public_acls = true

block_public_policy = true

ignore_public_acls = true

restrict_public_buckets = true

}

resource "aws_s3_object" "test" {

bucket = aws_s3_bucket.test.id

key = "hello.json"

content = jsonencode({ name = "S3" })

}

output "test_s3_bucket" {

value = random_pet.test_bucket_name.id

}

Now let's create a new AWS Lambda function in a new s3 folder.

s3/function.js

const aws = require('aws-sdk');

const s3 = new aws.S3({ apiVersion: '2006-03-01' });

exports.handler = async (event, context) => {

console.log('Received event:', JSON.stringify(event, null, 2));

const bucket = event.bucket;

const object = event.object;

const key = decodeURIComponent(object.replace(/\+/g, ' '));

const params = {

Bucket: bucket,

Key: key,

};

try {

const { Body } = await s3.getObject(params).promise();

const content = Body.toString('utf-8');

return content;

} catch (err) {

console.log(err);

const message = `Error getting object ${key} from bucket ${bucket}.`;

console.log(message);

throw new Error(message);

}

};
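The key decoding step in the function above is worth a closer look: plus signs are turned into spaces first, and then percent-encoded sequences are decoded. Here is a tiny sketch of that step in isolation, with decodeKey as a hypothetical name and made-up sample keys.

```javascript
// Same decoding as in s3/function.js, extracted into a hypothetical helper.
const decodeKey = (object) => decodeURIComponent(object.replace(/\+/g, ' '));

console.log(decodeKey('hello.json'));               // hello.json
console.log(decodeKey('my+report%282022%29.json')); // my report(2022).json
```

One consequence is that a literal plus sign in the object field is read as a space, so a key that really contains a plus sign would need to be passed as %2B.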

While in the s3 folder, run npm init and then npm install aws-sdk.

That's all for the function; now we need to create the Lambda with Terraform.

terraform/6-s3-lambda.tf

resource "aws_iam_role" "s3_lambda_exec" {

name = "s3-lambda"

assume_role_policy = <<POLICY

{

"Version": "2012-10-17",

"Statement": [

{

"Effect": "Allow",

"Principal": {

"Service": "lambda.amazonaws.com"

},

"Action": "sts:AssumeRole"

}

]

}

POLICY

}

resource "aws_iam_role_policy_attachment" "s3_lambda_policy" {

role = aws_iam_role.s3_lambda_exec.name

policy_arn = "arn:aws:iam::aws:policy/service-role/AWSLambdaBasicExecutionRole"

}

resource "aws_iam_policy" "test_s3_bucket_access" {

name = "TestS3BucketAccess"

policy = jsonencode({

Version = "2012-10-17"

Statement = [

{

Action = [

"s3:GetObject",

]

Effect = "Allow"

Resource = "arn:aws:s3:::${aws_s3_bucket.test.id}/*"

},

]

})

}

resource "aws_iam_role_policy_attachment" "s3_lambda_test_s3_bucket_access" {

role = aws_iam_role.s3_lambda_exec.name

policy_arn = aws_iam_policy.test_s3_bucket_access.arn

}

resource "aws_lambda_function" "s3" {

function_name = "s3"

s3_bucket = aws_s3_bucket.lambda_bucket.id

s3_key = aws_s3_object.lambda_s3.key

runtime = "nodejs16.x"

handler = "function.handler"

source_code_hash = data.archive_file.lambda_s3.output_base64sha256

role = aws_iam_role.s3_lambda_exec.arn

}

resource "aws_cloudwatch_log_group" "s3" {

name = "/aws/lambda/${aws_lambda_function.s3.function_name}"

retention_in_days = 14

}

data "archive_file" "lambda_s3" {

type = "zip"

source_dir = "../${path.module}/s3"

output_path = "../${path.module}/s3.zip"

}

resource "aws_s3_object" "lambda_s3" {

bucket = aws_s3_bucket.lambda_bucket.id

key = "s3.zip"

source = data.archive_file.lambda_s3.output_path

source_hash = filemd5(data.archive_file.lambda_s3.output_path)

}

If you just want to deploy it from your local machine, you can create a simple wrapper around terraform. This would be an extremely simple script, just to give you an idea of how to build and deploy it.

terraform/terraform.sh

#!/bin/sh

set -e

cd ../s3

npm ci

cd ../terraform

terraform apply

Let's make this script executable.

chmod +x terraform.sh

Finally, let's run it.

./terraform.sh

We can invoke this new s3 function and provide a JSON payload with the bucket name and the object key. Replace the bucket placeholder with the value of the test_s3_bucket output.

aws lambda invoke \

--region=us-east-1 \

--function-name=s3 \

--cli-binary-format raw-in-base64-out \

--payload '{"bucket":"test-<your>-<name>","object":"hello.json"}' \

response.json

If you print the response, you should see "{\"name\":\"S3\"}"

cat response.json
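The escaped quotes appear because the handler returns the file content as a string, and that string is then serialized as JSON once more in the response. A short sketch of how a client could get back to the object, assuming response.json holds exactly the value shown above:

```javascript
// Assumed content of response.json: a JSON string that itself contains JSON.
const raw = JSON.stringify(JSON.stringify({ name: 'S3' }));
console.log(raw); // "{\"name\":\"S3\"}"

// First parse: yields the file content as a plain string.
const content = JSON.parse(raw);

// Second parse: yields the object stored in hello.json.
const data = JSON.parse(content);
console.log(data.name); // S3
```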