Hello readers, in today's article we are going to deploy an application to Kubernetes in AWS and access it via AWS API Gateway.

First, we will create an AWS VPC and an EKS cluster using Terraform. Then we will deploy a simple app to Kubernetes and expose it using a private Network Load Balancer. We also need to create an API Gateway using Terraform. Finally, I'll show you how to integrate API Gateway with Amazon EKS.

1 - Create EKS Cluster Using Terraform

Let's create an EKS cluster and VPC from scratch.

In the provider file, we declare a version constraint for the AWS provider, along with variables for the EKS cluster name and the Kubernetes version.

terraform/0-provider.tf

    terraform {
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = "~> 4.0"
        }
      }
    }

    provider "aws" {
      region = "us-east-1"
    }

    variable "cluster_name" {
      default = "demo"
    }

    variable "cluster_version" {
      default = "1.22"
    }
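Because both variables only carry defaults, they can be overridden without editing the provider file. A minimal sketch, assuming a terraform.tfvars file placed next to the other .tf files; the file and the value demo-staging are hypothetical and not part of the original setup:

```hcl
# terraform/terraform.tfvars (hypothetical file, loaded automatically by Terraform)

# Overrides the "demo" default; illustrative name only.
cluster_name = "demo-staging"

# Same value as the default; replace it with a Kubernetes version that EKS
# still offers at the time you run this.
cluster_version = "1.22"
```

If you change cluster_name, keep in mind that the aws eks update-kubeconfig command later in this article uses the name demo and would need the same change.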

Then we need a VPC.

terraform/1-vpc.tf

    resource "aws_vpc" "main" {
      cidr_block = "10.0.0.0/16"

      tags = {
        Name = "main"
      }
    }

Internet gateway.

terraform/2-igw.tf

    resource "aws_internet_gateway" "igw" {
      vpc_id = aws_vpc.main.id

      tags = {
        Name = "igw"
      }
    }

Four subnets, two private and two public.

terraform/3-subnets.tf

    resource "aws_subnet" "private-us-east-1a" {
      vpc_id            = aws_vpc.main.id
      cidr_block        = "10.0.0.0/19"
      availability_zone = "us-east-1a"

      tags = {
        "Name"                                      = "private-us-east-1a"
        "kubernetes.io/role/internal-elb"           = "1"
        "kubernetes.io/cluster/${var.cluster_name}" = "owned"
      }
    }

    resource "aws_subnet" "private-us-east-1b" {
      vpc_id            = aws_vpc.main.id
      cidr_block        = "10.0.32.0/19"
      availability_zone = "us-east-1b"

      tags = {
        "Name"                                      = "private-us-east-1b"
        "kubernetes.io/role/internal-elb"           = "1"
        "kubernetes.io/cluster/${var.cluster_name}" = "owned"
      }
    }

    resource "aws_subnet" "public-us-east-1a" {
      vpc_id                  = aws_vpc.main.id
      cidr_block              = "10.0.64.0/19"
      availability_zone       = "us-east-1a"
      map_public_ip_on_launch = true

      tags = {
        "Name"                                      = "public-us-east-1a"
        "kubernetes.io/role/elb"                    = "1"
        "kubernetes.io/cluster/${var.cluster_name}" = "owned"
      }
    }

    resource "aws_subnet" "public-us-east-1b" {
      vpc_id                  = aws_vpc.main.id
      cidr_block              = "10.0.96.0/19"
      availability_zone       = "us-east-1b"
      map_public_ip_on_launch = true

      tags = {
        "Name"                                      = "public-us-east-1b"
        "kubernetes.io/role/elb"                    = "1"
        "kubernetes.io/cluster/${var.cluster_name}" = "owned"
      }
    }
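The four /19 blocks above are consecutive slices of the 10.0.0.0/16 VPC range. As a sketch of where those numbers come from, Terraform's built-in cidrsubnet function can derive the same values. The local names below are hypothetical, and the subnets in this article keep their hard-coded CIDRs:

```hcl
locals {
  # Same range as aws_vpc.main.
  vpc_cidr = "10.0.0.0/16"

  # Adding 3 bits to a /16 prefix yields /19 networks; 8 of them fit in the VPC.
  private_cidrs = [
    cidrsubnet(local.vpc_cidr, 3, 0), # "10.0.0.0/19"
    cidrsubnet(local.vpc_cidr, 3, 1), # "10.0.32.0/19"
  ]

  public_cidrs = [
    cidrsubnet(local.vpc_cidr, 3, 2), # "10.0.64.0/19"
    cidrsubnet(local.vpc_cidr, 3, 3), # "10.0.96.0/19"
  ]
}
```

You can evaluate these expressions in terraform console before wiring them into a cidr_block argument.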

NAT Gateway to provide internet access for private subnets.

terraform/4-nat.tf

    resource "aws_eip" "nat" {
      vpc = true

      tags = {
        Name = "nat"
      }
    }

    resource "aws_nat_gateway" "nat" {
      allocation_id = aws_eip.nat.id
      subnet_id     = aws_subnet.public-us-east-1a.id

      tags = {
        Name = "nat"
      }

      depends_on = [aws_internet_gateway.igw]
    }

Routes.

terraform/5-routes.tf

    resource "aws_route_table" "private" {
      vpc_id = aws_vpc.main.id

      route {
        cidr_block     = "0.0.0.0/0"
        nat_gateway_id = aws_nat_gateway.nat.id
      }

      tags = {
        Name = "private"
      }
    }

    resource "aws_route_table" "public" {
      vpc_id = aws_vpc.main.id

      route {
        cidr_block = "0.0.0.0/0"
        gateway_id = aws_internet_gateway.igw.id
      }

      tags = {
        Name = "public"
      }
    }

    resource "aws_route_table_association" "private-us-east-1a" {
      subnet_id      = aws_subnet.private-us-east-1a.id
      route_table_id = aws_route_table.private.id
    }

    resource "aws_route_table_association" "private-us-east-1b" {
      subnet_id      = aws_subnet.private-us-east-1b.id
      route_table_id = aws_route_table.private.id
    }

    resource "aws_route_table_association" "public-us-east-1a" {
      subnet_id      = aws_subnet.public-us-east-1a.id
      route_table_id = aws_route_table.public.id
    }

    resource "aws_route_table_association" "public-us-east-1b" {
      subnet_id      = aws_subnet.public-us-east-1b.id
      route_table_id = aws_route_table.public.id
    }

Then the EKS cluster itself.

terraform/6-eks.tf

    resource "aws_iam_role" "eks-cluster" {
      name = "eks-cluster-${var.cluster_name}"

      assume_role_policy = <<-POLICY
        {
          "Version": "2012-10-17",
          "Statement": [
            {
              "Effect": "Allow",
              "Principal": {
                "Service": "eks.amazonaws.com"
              },
              "Action": "sts:AssumeRole"
            }
          ]
        }
      POLICY
    }

    resource "aws_iam_role_policy_attachment" "amazon-eks-cluster-policy" {
      policy_arn = "arn:aws:iam::aws:policy/AmazonEKSClusterPolicy"
      role       = aws_iam_role.eks-cluster.name
    }

    resource "aws_eks_cluster" "cluster" {
      name     = var.cluster_name
      version  = var.cluster_version
      role_arn = aws_iam_role.eks-cluster.arn

      vpc_config {
        subnet_ids = [
          aws_subnet.private-us-east-1a.id,
          aws_subnet.private-us-east-1b.id,
          aws_subnet.public-us-east-1a.id,
          aws_subnet.public-us-east-1b.id
        ]
      }

      depends_on = [aws_iam_role_policy_attachment.amazon-eks-cluster-policy]
    }

Single node group with one node.

terraform/7-nodes.tf

    resource "aws_iam_role" "nodes" {
      name = "eks-node-group-nodes"

      assume_role_policy = jsonencode({
        Statement = [{
          Action = "sts:AssumeRole"
          Effect = "Allow"
          Principal = {
            Service = "ec2.amazonaws.com"
          }
        }]
        Version = "2012-10-17"
      })
    }

    resource "aws_iam_role_policy_attachment" "amazon-eks-worker-node-policy" {
      policy_arn = "arn:aws:iam::aws:policy/AmazonEKSWorkerNodePolicy"
      role       = aws_iam_role.nodes.name
    }

    resource "aws_iam_role_policy_attachment" "amazon-eks-cni-policy" {
      policy_arn = "arn:aws:iam::aws:policy/AmazonEKS_CNI_Policy"
      role       = aws_iam_role.nodes.name
    }

    resource "aws_iam_role_policy_attachment" "amazon-ec2-container-registry-read-only" {
      policy_arn = "arn:aws:iam::aws:policy/AmazonEC2ContainerRegistryReadOnly"
      role       = aws_iam_role.nodes.name
    }

    resource "aws_eks_node_group" "private-nodes" {
      cluster_name    = aws_eks_cluster.cluster.name
      version         = var.cluster_version
      node_group_name = "private-nodes"
      node_role_arn   = aws_iam_role.nodes.arn

      subnet_ids = [
        aws_subnet.private-us-east-1a.id,
        aws_subnet.private-us-east-1b.id
      ]

      capacity_type  = "ON_DEMAND"
      instance_types = ["t3.small"]

      scaling_config {
        desired_size = 1
        max_size     = 5
        min_size     = 0
      }

      update_config {
        max_unavailable = 1
      }

      labels = {
        role = "general"
      }

      depends_on = [
        aws_iam_role_policy_attachment.amazon-eks-worker-node-policy,
        aws_iam_role_policy_attachment.amazon-eks-cni-policy,
        aws_iam_role_policy_attachment.amazon-ec2-container-registry-read-only,
      ]

      # Allow external changes without Terraform plan difference
      lifecycle {
        ignore_changes = [scaling_config[0].desired_size]
      }
    }

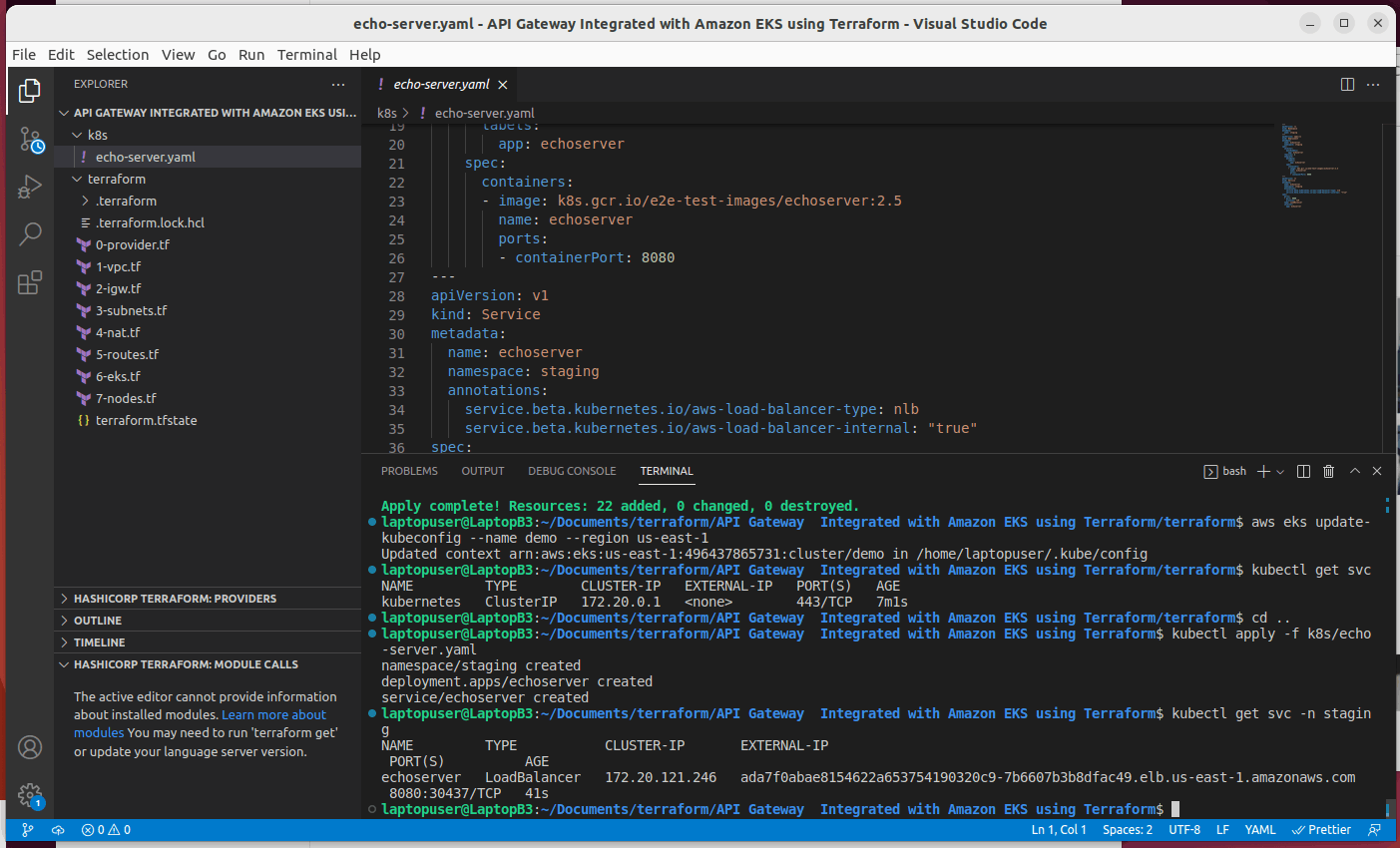

Now let's create the VPC and the cluster with the terraform apply command. Terraform does not touch your local kubeconfig when it creates a cluster, so you need to update your Kubernetes context manually with aws eks update-kubeconfig.

terraform init

terraform apply

aws eks update-kubeconfig --name demo --region us-east-1

kubectl get svc
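The update-kubeconfig command above hard-codes the cluster name and region. As a small convenience, and only a sketch, a Terraform output could assemble the same command from the cluster resource so it stays in sync with var.cluster_name. The output name is hypothetical, and the region is assumed to match the one set in the provider block:

```hcl
# Hypothetical helper output; not part of the original configuration.
output "update_kubeconfig_command" {
  description = "Command that points kubectl at the new cluster"
  value       = "aws eks update-kubeconfig --name ${aws_eks_cluster.cluster.name} --region us-east-1"
}
```

After terraform apply, running terraform output -raw update_kubeconfig_command prints the command ready to paste into the terminal.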

2 - Deploy App to Kubernetes and Expose It with NLB

Next, we need to deploy an app to Kubernetes and expose it with a Network Load Balancer. You don't need to deploy the AWS Load Balancer Controller for that; you can just use annotations on the Service.

We're going to deploy this app to the staging namespace. The Deployment is based on a simple echoserver that was built for debugging purposes. The Service exposes the app to other applications and services only within our VPC. By default, Kubernetes creates a Classic Load Balancer; to change it to a Network Load Balancer, set the aws-load-balancer-type annotation to "nlb". Also, since we will connect this service to API Gateway over a private link, we don't need to expose it to the internet, so the aws-load-balancer-internal annotation is set to "true".

    ---
    apiVersion: v1
    kind: Namespace
    metadata:
      name: staging
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: echoserver
      namespace: staging
    spec:
      selector:
        matchLabels:
          app: echoserver
      replicas: 1
      template:
        metadata:
          labels:
            app: echoserver
        spec:
          containers:
            - image: k8s.gcr.io/e2e-test-images/echoserver:2.5
              name: echoserver
              ports:
                - containerPort: 8080
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: echoserver
      namespace: staging
      annotations:
        service.beta.kubernetes.io/aws-load-balancer-type: nlb
        service.beta.kubernetes.io/aws-load-balancer-internal: "true"
    spec:
      ports:
        - port: 8080
          protocol: TCP
      type: LoadBalancer
      selector:
        app: echoserver

Let's go ahead and apply this app.

kubectl apply -f k8s/echo-server.yaml

If you list the services in the staging namespace, you should see the hostname of the load balancer in the EXTERNAL-IP column. Take note of it or copy it.

kubectl get svc -n staging

3 - Integrate API Gateway with Amazon EKS

Now let's create the AWS API Gateway using Terraform. We're going to use API Gateway version 2 (the aws_apigatewayv2_* resources) with the HTTP protocol type.

terraform/8-api-gateway.tf

    resource "aws_apigatewayv2_api" "main" {
      name          = "main"
      protocol_type = "HTTP"
    }

    resource "aws_apigatewayv2_stage" "dev" {
      api_id = aws_apigatewayv2_api.main.id

      name        = "dev"
      auto_deploy = true
    }

Go back to the terminal and apply it.

terraform apply

Integrate API Gateway with Amazon EKS

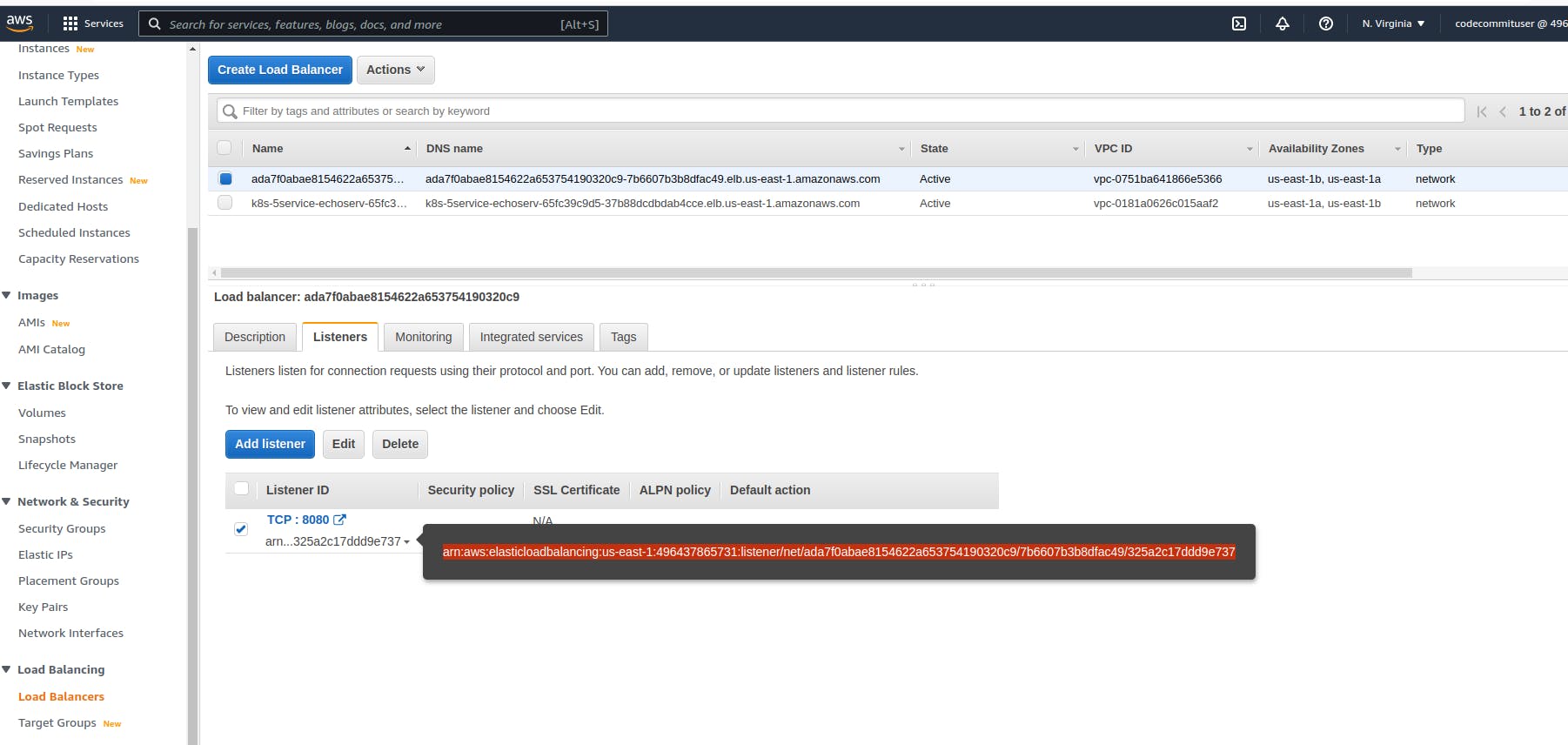

The final step is to integrate API Gateway with Amazon EKS. For the integration, we need to create a VPC link (the private link between API Gateway and our VPC), but before that, we need to create a security group for it. The integration itself uses the HTTP_PROXY type. For the integration method, you can specify a single HTTP method such as GET or POST, or allow all of them with ANY. The connection type is VPC_LINK, and the connection ID points to the VPC link itself.

For the integration URI, we need to specify the ARN of the load balancer listener. You can find it in the AWS console in the load balancer section, under Listeners. Copy this long ARN and use it as the URI.
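Instead of copying the ARN by hand, it may be possible to look it up with data sources. This is a sketch under assumptions, not what this article's configuration does. It assumes the load balancer created for the Service carries the tag kubernetes.io/service-name with the value staging/echoserver (verify the tags on your own load balancer), and that the Service has already been applied, since the lookup fails if the load balancer does not exist yet. The data source and output names are hypothetical:

```hcl
# Find the NLB that Kubernetes created for the echoserver Service.
# Assumption: the load balancer is tagged with the namespace/name of the Service.
data "aws_lb" "echoserver" {
  tags = {
    "kubernetes.io/service-name" = "staging/echoserver"
  }
}

# Find the listener on the Service port (8080 in k8s/echo-server.yaml).
data "aws_lb_listener" "echoserver" {
  load_balancer_arn = data.aws_lb.echoserver.arn
  port              = 8080
}

# The value that would go into integration_uri.
output "echoserver_listener_arn" {
  value = data.aws_lb_listener.echoserver.arn
}
```

With this in place, the integration could reference data.aws_lb_listener.echoserver.arn rather than a pasted string, at the cost of making the Terraform run depend on the Kubernetes Service existing first.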

The final resource that we need to declare is the route for API Gateway. You need to specify the API Gateway ID, then the route key, which combines the HTTP method and URL path, and finally the target, which references the ID of the integration.

terraform/9-integration.tf

    resource "aws_security_group" "vpc_link" {
      name   = "vpc-link"
      vpc_id = aws_vpc.main.id

      egress {
        from_port   = 0
        to_port     = 0
        protocol    = "-1"
        cidr_blocks = ["0.0.0.0/0"]
      }
    }

    resource "aws_apigatewayv2_vpc_link" "eks" {
      name               = "eks"
      security_group_ids = [aws_security_group.vpc_link.id]
      subnet_ids = [
        aws_subnet.private-us-east-1a.id,
        aws_subnet.private-us-east-1b.id
      ]
    }

    resource "aws_apigatewayv2_integration" "eks" {
      api_id = aws_apigatewayv2_api.main.id

      # Replace with the listener ARN of your own load balancer.
      integration_uri    = "arn:aws:elasticloadbalancing:us-east-1:496437865731:listener/net/ada7f0abae8154622a653754190320c9/7b6607b3b8dfac49/325a2c17ddd9e737"
      integration_type   = "HTTP_PROXY"
      integration_method = "ANY"
      connection_type    = "VPC_LINK"
      connection_id      = aws_apigatewayv2_vpc_link.eks.id
    }

    resource "aws_apigatewayv2_route" "get_echo" {
      api_id = aws_apigatewayv2_api.main.id

      route_key = "GET /echo"
      target    = "integrations/${aws_apigatewayv2_integration.eks.id}"
    }

    output "hello_base_url" {
      value = "${aws_apigatewayv2_stage.dev.invoke_url}/echo"
    }
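The route key is simply the HTTP method followed by the path, so further routes can reuse the same integration. A sketch with two hypothetical routes that are not part of the original configuration: one for POST requests to the same path, and one greedy route that matches any method on any sub-path below /echo/:

```hcl
# Hypothetical: also accept POST on /echo.
resource "aws_apigatewayv2_route" "post_echo" {
  api_id = aws_apigatewayv2_api.main.id

  route_key = "POST /echo"
  target    = "integrations/${aws_apigatewayv2_integration.eks.id}"
}

# Hypothetical: match any method and any sub-path below /echo/.
resource "aws_apigatewayv2_route" "any_echo_proxy" {
  api_id = aws_apigatewayv2_api.main.id

  route_key = "ANY /echo/{proxy+}"
  target    = "integrations/${aws_apigatewayv2_integration.eks.id}"
}
```

Requests that match no route key are rejected by API Gateway before they reach the load balancer, so only the paths you declare are exposed.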

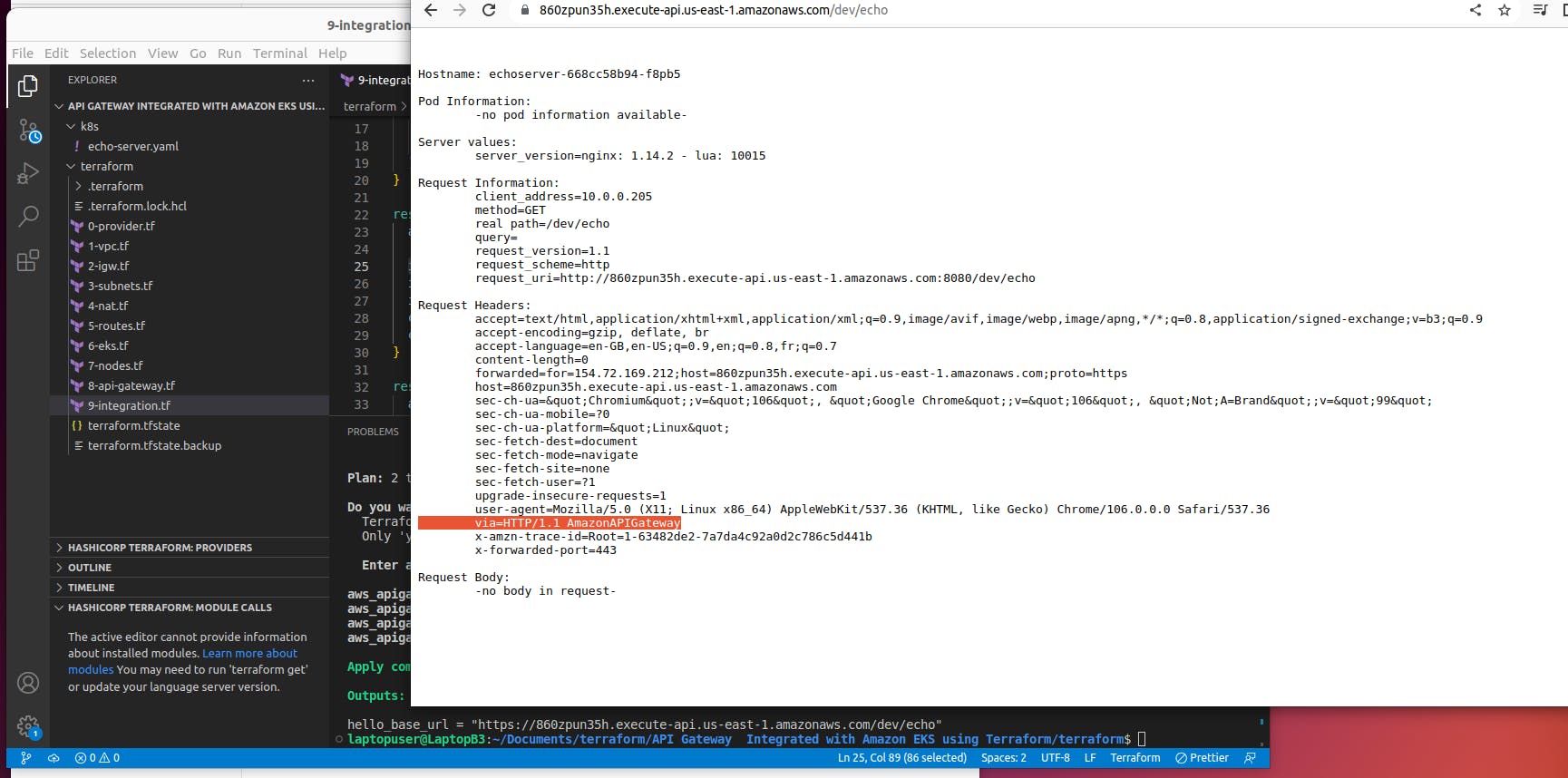

This is the last time we need to run Terraform.

terraform apply

Use curl to test the integration.

curl https://<your-gw-id>.execute-api.us-east-1.amazonaws.com/dev/echo

Alright, it works: we were able to access the application running in the Kubernetes cluster via API Gateway and a private link.

Thank you for reading. If you want more articles like this, subscribe.